ACT Response to the EC Call for contribution on competition in virtual worlds and generative AI


INTRODUCTION

The audiovisual (AV) sector has been at the forefront of technological transformation since its inception, with advances enhancing both the creative process and the distribution that delivers content to audiences everywhere.

The current wave of emerging technologies, driven by AI, advanced algorithms and machine learning models, presents opportunities, challenges and considerations for the creative industries. Questions around ethics, cybersecurity, copyright enforcement, and how to tackle disinformation and hate speech are increasingly prominent in the online sphere and require thorough reflection. Using these technologies to enhance creativity whilst ensuring that human beings remain at the heart of the creative process will be essential.

ACT members are already making use of AI technology, from post-production (for example, visual effects, de-ageing and colour adjustment) to marketing, archive management and even entirely new, bespoke interactive experiences for audiences. The ACT approach to AI is grounded in our positions and principles on Intellectual Property, Media, Privacy and Data law.

In this landscape, AI developers rely on services from major companies to fulfil critical functions, while AI deployers that lack internal AI expertise acquire solutions through partnerships with, or subsidiaries of, gatekeepers. The evolving dynamics of AI adoption and monetisation highlight the complex interplay between industry players and regulatory frameworks in shaping the future of AI in the creative industries1.

The rise of AI will have fundamental implications for all industries, including the audiovisual sector. For commercial broadcasters, it will affect the creative, production, investment and distribution processes. At this early stage of AI's integration across the AV value chain, we look forward to contributing to efforts to ensure that regulatory frameworks are future-proof and technologically neutral, allowing flexibility while upholding the fundamental principles of copyright, contractual freedom and investment drivers.

The existing legal and technical mechanisms aimed at preventing the unauthorised use of copyrighted content place the burden of proving abuse on rights holders. In the context of AI, this is a nearly impossible task given the opacity of how AI providers gather and use training data. Looking forward, guidance from the AI Office on the practical application of the opt-out, together with effective guidelines on transparency, will be essential.

Regarding the Digital Markets Act (DMA), we recommend that the European Commission thoroughly evaluate whether generative AI services owned and operated by gatekeeper platforms, such as Gemini (Alphabet), Llama 2 (Meta) or the forthcoming “AppleGPT” (Apple), may constitute core platform services (CPS).

Similarly pertinent to the DMA, it may be necessary to evaluate how gatekeepers' ‘traditional’ CPS evolve with the integration and use of AI. Noteworthy services in this context include Google Search (Alphabet, search engine), Bing (Microsoft, search engine) and AI Sandbox (Meta, advertising services). DMA provisions, such as those concerning self-preferencing, should be viewed in that light.

1 Jacobides, M.G., et al. “The Evolutionary Dynamics of the Artificial Intelligence Ecosystem” (2021) Strategy Science 6(4): 412-435, p. 418.

QUESTIONS

1) What are the main components (i.e., inputs) necessary to build, train, deploy and distribute generative AI systems? Please explain the importance of these components

Diverse and high-quality data is at the core of effective AI systems. Depending on the output of a given generative AI (GAI) system, ingested data may take the form of text, audio, images, video, etc., or a combination thereof. This data comes from a number of sources, ranging from proprietary databases to internet crawling, and is curated into data sets used to train GAI systems. Given the nature of the data required to train these systems, AV content created by ACT members provides important source material. Whilst the text and data mining (TDM) exception in the 2019 Copyright Directive allows rights holders to opt out of their works being used in this manner, it appears that this opt-out is sometimes ignored by certain AI providers. As a result, rights holders are not consistently receiving compensation when their content is used to train GAI systems. It will be important for the AI Office to provide clear guidance on this matter to ensure, amongst other things, that competition is not distorted, for example where the systematic infringement of rights during ingestion and training enables certain GAI systems to become dominant.

3) What are the main drivers of competition (i.e., the elements that make a company a successful player) for the provision, distribution or integration of generative AI systems and/or components, including AI models?

As noted in our answer to question 1, access to diverse and high-quality data is a key driver of competition in this space. Whilst rights holders such as content providers can opt their works out of text and data mining, this opt-out must be respected by AI providers to ensure transparent and fair competition. Infringement of copyright law is one of the factors that may lead to unfair dominance in the market. Transparency around ingestion is a first step to address this, and it will be important that the rules under the AI Act on “sufficiently detailed summaries” are implemented effectively to […] (such as the ones provided […]).

Additionally, AI providers, which are often part of large gatekeeper platforms, can further increase their market power through the outputs they generate, for example by creating competing products based on rights holders' content.

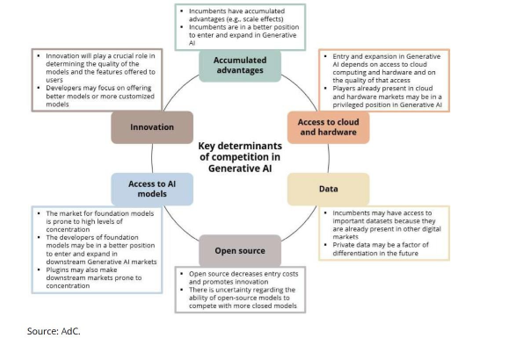

Especially for the AV sector there needs to be access to services by major tech companies. If those block third parties and e.g. use generative AI-tools only within their own offerings this would pose a huge risk and disadvantage to the AV and content industry.In November of last year, the Portuguese Competition Authority, Autoridade da Concorrenza (The “AdC”) published a comprehensive paper on the risks of AI in Competition. The following infographic included in the study summarises the main key determinants of competition in generative AI:

4) Which competition issues will likely emerge for the provision, distribution or integration of generative AI systems and/or components, including AI models? Please indicate to which components they relate.

From the standpoint of content providers, the practical application of protections under the current copyright framework, such as opting out of the TDM exception in a machine-readable way, provides some answers. However, there are increasing concerns that rights holders face challenges in engaging with AI providers in the value creation process. There is also uncertainty about whether opting out could result in disadvantages in search engine indexing. It is crucial to clarify that reserving rights against text and data mining must not lead to disadvantages in search engine indexing, i.e. that such tying should be prohibited. In addition to the opt-out mechanism and transparency, this is an issue on which the AI Office could provide guidelines or clarifications.

7) What is the role of data and what are its relevant characteristics for the provision of generative AI systems and/or components, including AI models?

The role of data in the provision of generative AI systems and components, including AI models, is paramount and deeply intertwined with their development, deployment, and impact on various industries. Big data, in particular, plays a central role in the advancement of AI technologies, enabling the processing of vast amounts of information that are essential for training and fine-tuning generative AI models.

Generative AI also raises a host of other issues related to privacy, security, and intellectual property. Concerns about copyright infringement2 arise from the use of copyrighted works as training data3. Manipulation risks emerge when training data is tweaked to influence the responses of generative AI models, leading to concerns about the reliability and security of these systems.

2 Recent cases have highlighted this issue, such as the case in the US between The New York Times and OpenAI.

3 AdC p. 21

While the development of AI technologies presents opportunities for innovation and advancement, it must not come at the expense of the exclusive rights of copyright holders. Balancing the benefits of AI with respect for intellectual property rights and privacy considerations is essential for fostering a sustainable and ethical AI ecosystem.

11) Do you expect the emergence of generative AI systems and/or components, including AI models to trigger the need to adapt EU legal antitrust concepts?

The dominance of gatekeepers in the market remains substantial. Their search engines and AI services benefit greatly from network effects and could further consolidate their market control, hindering fair competition. Just as search engines, ad tech and online marketplaces prompted specific regulatory responses, AI business models may present distinct challenges. Hence, there is a strong case for close monitoring by the European Commission, examining this area further at EU level in line with the work under way in countries such as Portugal, France and the UK.

12) Do you expect the emergence of generative AI systems to trigger the need to adapt EU antitrust investigation tools and practices?

Competition law, alongside the Digital Markets Act (DMA) and national legislation, must evolve in response to advances in AI technology. It is essential to recognise that the most powerful AI models are likely to be developed and controlled by the existing gatekeepers. Specifically, we anticipate encountering issues similar to those observed in various digital markets currently under the scrutiny of the DMA. In those markets, competition procedures have often been too slow to remedy the situation: markets tipped and competitors were forced to exit before investigations could be completed. This should be avoided in the development of next-generation technologies such as AI.